Face.com offers a robust API for facial recognition. In short, you send it an image, it finds the faces in that image, and it returns information about each one. That information can be used to locate features of the face and to read other characteristics, including age, gender, and the presence of glasses or a smile.

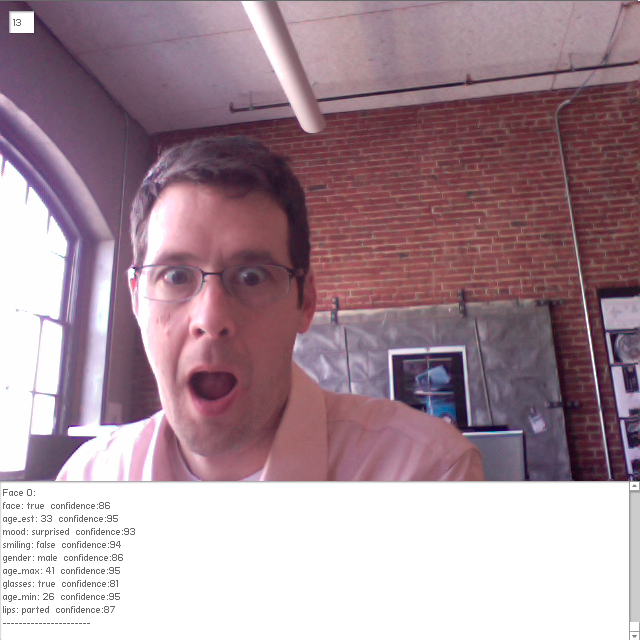

As a test, I built a standalone app that sends faces appearing in front of an attached webcam to the API for analysis.

First, I built a basic Flash AIR application to upload images to the face.com API. For this I used Jean Nawratil’s Flash ActionScript 3.0 Client Library and sent images each time a key was pressed on the keyboard. The only modification I made to Jean’s library was to add a parameter to the POST made to the API. The parameter (attributes=all) tells the API to return additional characteristics.
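As a rough illustration of that change, here is a minimal Python sketch (not the ActionScript library itself) of the form fields for such a POST. Only `attributes=all` comes from the description above; the endpoint URL, the `api_key` and `api_secret` field names, and the `build_detect_fields` helper are hypothetical placeholders.

```python
from urllib.parse import urlencode

# Hypothetical placeholder endpoint; not the real face.com URL.
DETECT_URL = "https://api.example.invalid/faces/detect"


def build_detect_fields(api_key: str, api_secret: str) -> dict:
    """Return the non-file form fields for a detection request."""
    return {
        "api_key": api_key,        # assumed field name
        "api_secret": api_secret,  # assumed field name
        "attributes": "all",       # the extra parameter described in the post
    }


fields = build_detect_fields("my-key", "my-secret")
encoded = urlencode(fields)  # form-encoded body, without the image itself
print(encoded)
```

In a real upload the image would travel alongside these fields, typically as a multipart form part; that part is left out here to keep the focus on the added parameter.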

With this in place, I integrated OpenCV to detect locally when a face is present in the frame. This way, I could send images to the API automatically, but only those with something for face.com to find, which reduces the load on their servers (and spares my rate limit).

I’ve used an AS3 implementation of OpenCV in the past, but the performance was terrible. For this project, I used Wouter Verweirder’s AIR Native Extension which offloads the work to native code. It is very responsive.

Using a standard face cascade, OpenCV detects faces and indicates approximately where they are in the frame. Based on this detection, my app sends a copy of the image to face.com for further analysis. The round trip to the face.com API is quick: generally less than a second, two at the most. I also added a timer to the app to further limit how often it sends an image to the API.
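The combination of local detection and a timer can be sketched as a small gate in Python. This is only an illustration of the idea, not the code from my AIR app: the `SendGate` class, its method name, and the two-second default interval are all assumptions made for the example.

```python
import time
from typing import Optional


class SendGate:
    """Decide whether a frame should be sent to the remote API."""

    def __init__(self, min_interval_s: float = 2.0) -> None:
        # Assumed minimum spacing between uploads, in seconds.
        self.min_interval_s = min_interval_s
        self._last_sent: Optional[float] = None

    def should_send(self, face_count: int, now: Optional[float] = None) -> bool:
        if now is None:
            now = time.monotonic()
        # Skip frames where the local detector found nothing.
        if face_count < 1:
            return False
        # Skip frames that arrive too soon after the previous upload.
        if self._last_sent is not None and now - self._last_sent < self.min_interval_s:
            return False
        self._last_sent = now
        return True


gate = SendGate(min_interval_s=2.0)
results = [
    gate.should_send(0, now=0.0),  # no face in frame
    gate.should_send(1, now=0.0),  # first face seen
    gate.should_send(1, now=1.0),  # too soon after the last upload
    gate.should_send(1, now=2.5),  # interval has elapsed
]
print(results)
```

Passing `now` explicitly makes the gate easy to exercise without a camera or a clock; in a live loop you would call `should_send` with the face count from the local detector for each frame and leave `now` unset.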

Below are some captures from this basic proof of concept, each followed by the characteristics the API detected.

In addition to basic face detection, the face.com API allows you to identify and tag people based on images you use to train the algorithm and/or images in a Facebook account. For more information about the API and what it can do, be sure to check out their online demo.