Cala de Portlligat

Golden Gate Sunrise

Undefined!

Postcard

generative tiles (random)

tides

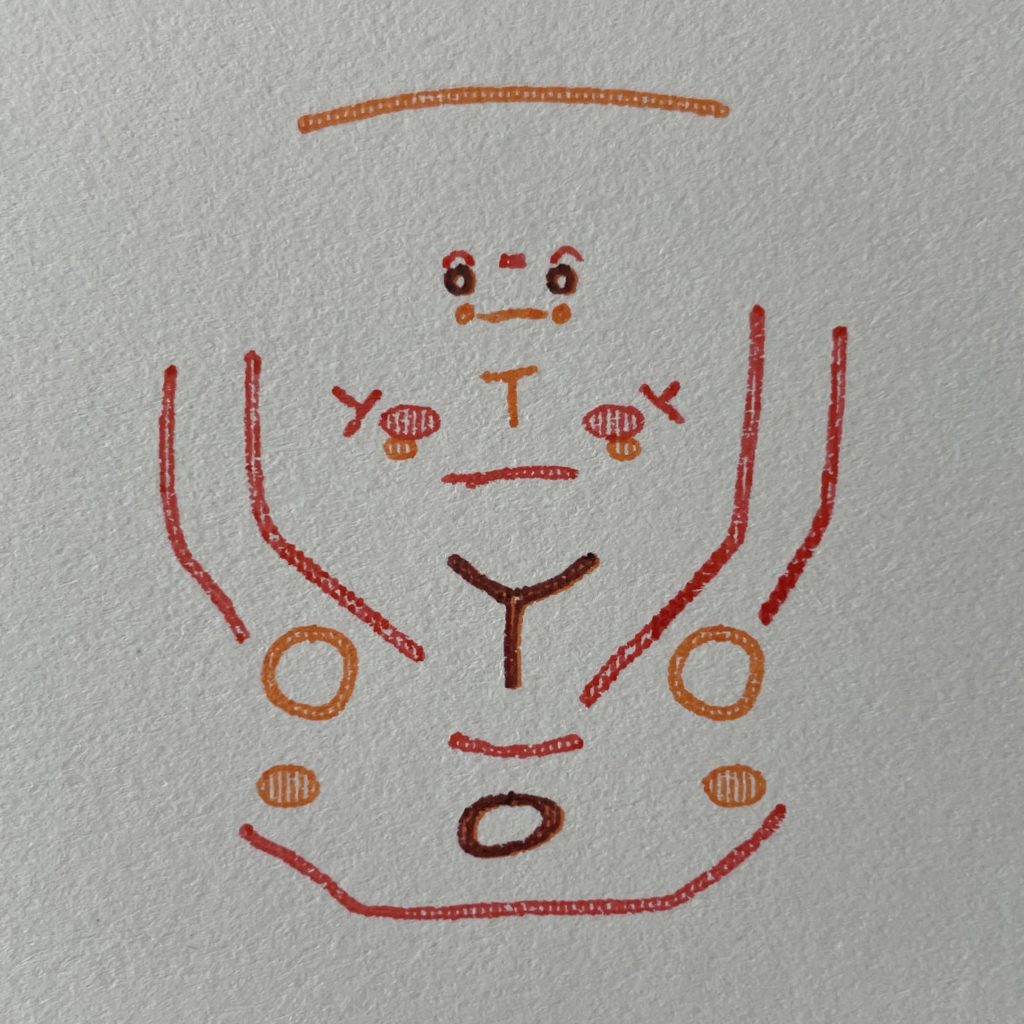

seismic flowers

This drawing uses data from the largest earthquake of each day over 379 days. The magnitude, depth, and continent of each earthquake are used. The intent is not to visualize the data for analysis but to use it as a compositional element.

The flowers are inspired by a traditional Japanese textile pattern.