Postcard

Automata kits

In addition to helping to update digital exhibits at the Exploratorium, I have been volunteering with them at the Sunday Streets SF fairs.

To provide something for kids to construct and take home, I designed two automata kits inspired by some examples I found online.

For variety, I used a rubber band to make a belt drive for the windmill kit. This had the added advantage of simplifying and speeding up the kit’s assembly. Gluing the wheel to the crankshaft (as is done in the rabbit kit) requires the maker to wait for the glue to dry before they can enjoy their toy.

All in all, the windmill was easier to assemble, mainly because the rabbit kit takes some coordination to fit all the tabs of the hat into the box.

Production of the kits involved a good deal of laser cutting, plus gluing as many pieces together ahead of time as possible. I decided to assemble all the crank handles, turbine shafts, and rabbit plungers to minimize the amount of gluing at the fair. The image below shows all the pieces prepared for the first fair.

Make your own

If you have access to a laser cutter, you can use the Illustrator files below to cut out most of the pieces, or you can cut your own by hand. If you are cutting your own, there is no need to follow the templates exactly; just use them as a rough guide and make simplifications and embellishments as you wish.

Aside from cardboard, you will need some rubber bands (I used size 64 from Office Depot) and some bamboo skewers (I got mine at Safeway).

generative tiles (random)

tides

seismic flowers

This drawing is made using data from the largest earthquake of each day for 379 days. It uses each earthquake's magnitude, depth, and the continent it occurred on. The intent is not to visualize the data for evaluation, but to use it as a compositional element.

The flowers are inspired by a traditional Japanese textile pattern.

sf bay area currents

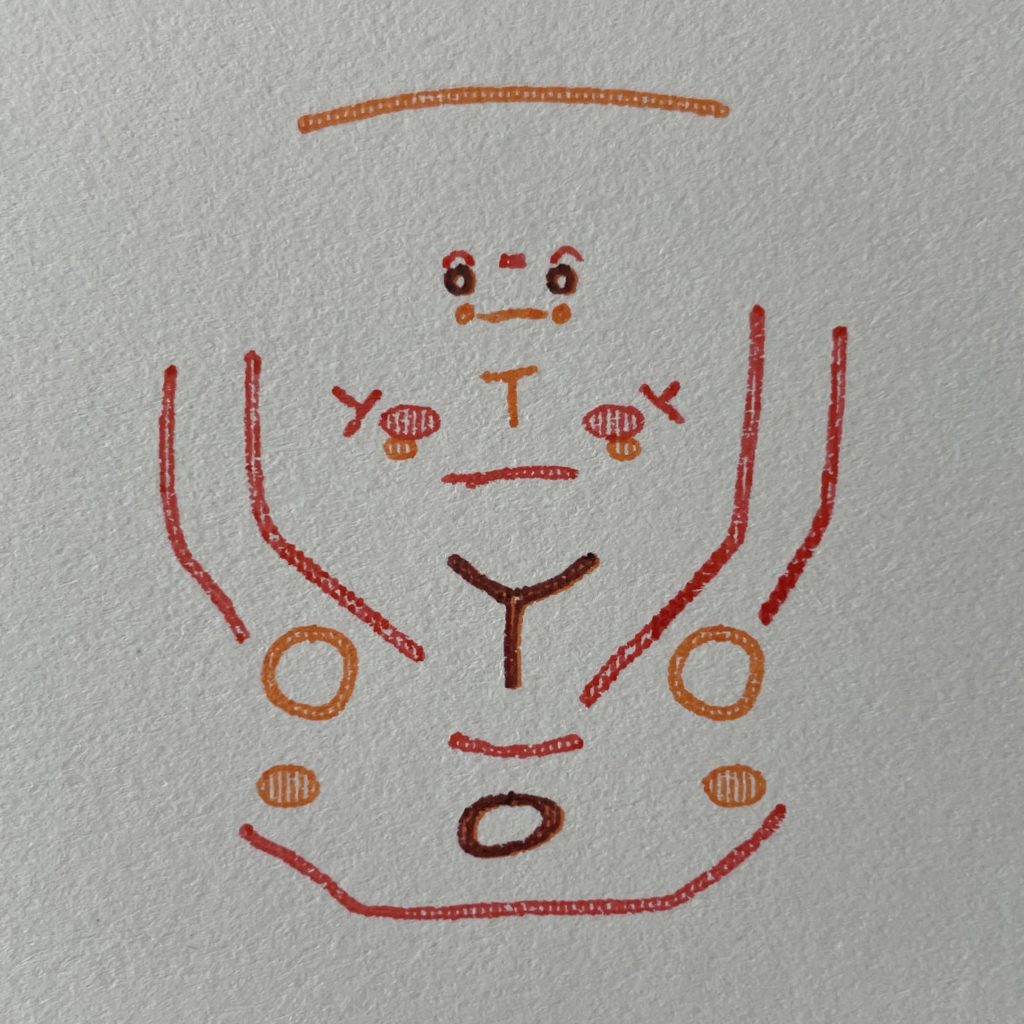

face generator

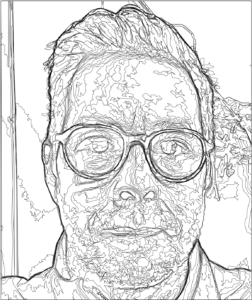

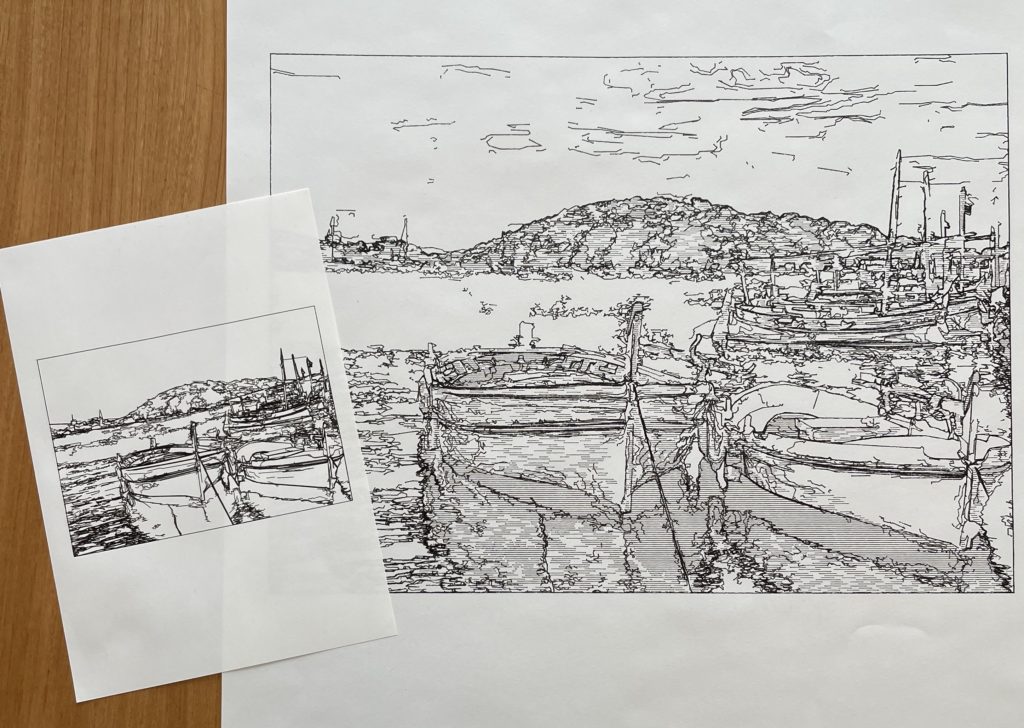

illustrating photos on a pen plotter

A couple of times in the past, I have looked into ways to algorithmically generate lines suitable for plotting based on an image. Now that I have finally built a plotter to test them out, I decided to take another pass.

As in previous cases, I started with OpenCV contours generated from threshold images, as seen in the image below.

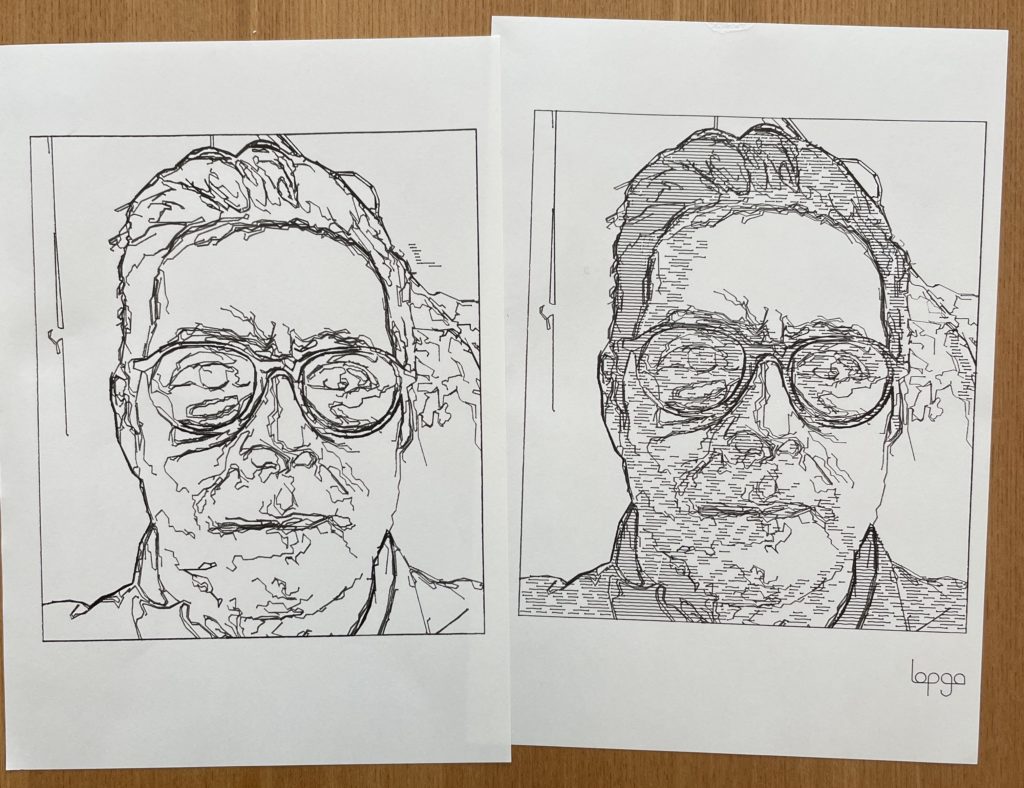
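As a rough sketch of this starting point, the snippet below thresholds a grayscale image at several brightness levels and traces the contours of each result. The function names, the step of 32 between levels, and the choice of `cv2.RETR_LIST` are assumptions for illustration, not the settings used for the image above.

```python
def threshold_levels(step=32, low=0, high=255):
    """Evenly spaced brightness cut-offs strictly between low and high."""
    return list(range(low + step, high, step))


def contours_from_thresholds(gray, levels):
    """Trace contours of a grayscale uint8 image at each threshold level.

    Returns a list of polylines, each a list of (x, y) tuples.
    """
    import cv2  # imported here so threshold_levels works without OpenCV

    polylines = []
    for level in levels:
        # Pixels brighter than `level` become white, the rest black.
        _, binary = cv2.threshold(gray, level, 255, cv2.THRESH_BINARY)
        # [-2] picks the contour list in both OpenCV 3.x and 4.x.
        contours = cv2.findContours(
            binary, cv2.RETR_LIST, cv2.CHAIN_APPROX_NONE
        )[-2]
        for contour in contours:
            # Each contour has shape (N, 1, 2); flatten it to (x, y) tuples.
            polylines.append([(int(x), int(y)) for x, y in contour[:, 0, :]])
    return polylines


# Typical use (the file name is hypothetical):
#   gray = cv2.imread("photo.jpg", cv2.IMREAD_GRAYSCALE)
#   lines = contours_from_thresholds(gray, threshold_levels())
```

A smaller step gives more contour lines, which makes the stacked-up areas denser but also adds more noise to filter out later.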

As it is, there is something compelling about this, but there is a lot of noise. The areas of the image where the contour lines stack up close to each other are areas where the brightness of the image changes quickly. My assumption was that these represent good lines to focus on for the illustration, so my first step was to filter out the other lines. Below is the result of keeping only points that are within a set range of another point. I also remove any of those points within range if they are really close to the point I am keeping. The ideal distances to use for this vary from image to image, but I was interested to see if there were some settings that worked fairly well for a variety of images. The image below uses these generic settings.

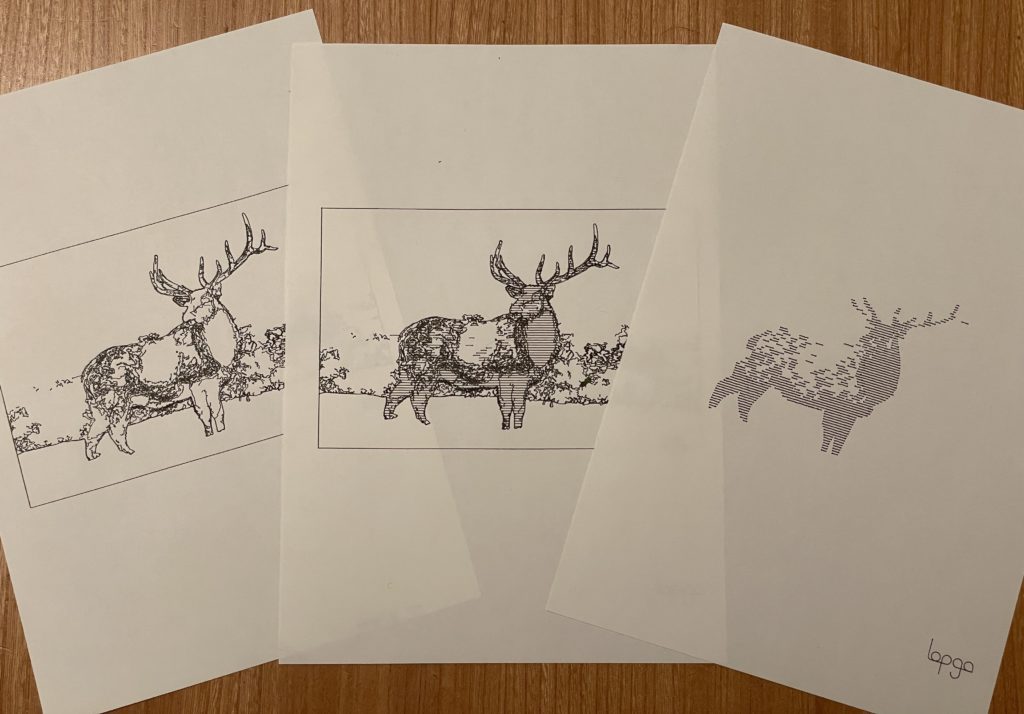
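A minimal sketch of that kind of proximity filter follows. It assumes that "another point" means a point on a different contour, and the names `keep_radius` and `merge_radius` and their values are hypothetical. The brute-force search is quadratic, so a real image would likely need a spatial index.

```python
import math


def filter_stacked_points(polylines, keep_radius=4.0, merge_radius=1.5):
    """Keep points that sit close to a point on a different polyline.

    Neighbours closer than merge_radius to a kept point are discarded so
    that tightly stacked contours collapse toward a single line.
    """
    # Tag every point with the index of the polyline it came from.
    tagged = [(p, idx) for idx, line in enumerate(polylines) for p in line]
    removed = set()
    kept = []
    for i, (p, owner) in enumerate(tagged):
        if i in removed:
            continue
        # Points on other polylines within keep_radius of this point.
        near = [
            j
            for j, (q, other) in enumerate(tagged)
            if other != owner and math.dist(p, q) <= keep_radius
        ]
        if not near:
            continue  # isolated point: not part of a stack of contours
        kept.append(p)
        for j in near:
            if math.dist(p, tagged[j][0]) <= merge_radius:
                removed.add(j)  # near-duplicate of the point just kept
    return kept
```

For example, with `[(0, 0), (10, 0), (50, 0)]` and `[(0, 1), (10, 3), (100, 100)]` as the two polylines, the isolated points at `(50, 0)` and `(100, 100)` are dropped, and `(0, 1)` is discarded as a near-duplicate of `(0, 0)`.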

There are still quite a few extra lines here, so I wrote a few more filters to remove particularly jaggy points from the lines and to remove remaining fragments that are really short. Next, I merged any lines whose endpoints were particularly close to each other (to reduce unnecessary pen lifts) before making a final pass to remove lines that don’t cover much area of the illustration. The results of each of these filters are included below.
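Two of these filters, dropping short fragments and merging lines with nearby endpoints, can be sketched as below. The greedy merge strategy and the `min_length` and `max_gap` values are assumptions, and every path is assumed to contain at least one point.

```python
import math


def path_length(path):
    """Total length of a polyline given as a list of (x, y) tuples."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def drop_short_paths(paths, min_length=5.0):
    """Discard fragments shorter than min_length."""
    return [p for p in paths if path_length(p) >= min_length]


def merge_close_paths(paths, max_gap=3.0):
    """Greedily join paths whose endpoints lie within max_gap of each other."""
    remaining = [list(p) for p in paths]
    merged = []
    while remaining:
        current = remaining.pop(0)
        extended = True
        while extended:
            extended = False
            for i, other in enumerate(remaining):
                # Try all four end-to-end pairings, reversing when needed.
                if math.dist(current[-1], other[0]) <= max_gap:
                    current = current + other
                elif math.dist(current[-1], other[-1]) <= max_gap:
                    current = current + other[::-1]
                elif math.dist(current[0], other[-1]) <= max_gap:
                    current = other + current
                elif math.dist(current[0], other[0]) <= max_gap:
                    current = other[::-1] + current
                else:
                    continue
                remaining.pop(i)
                extended = True
                break  # restart the scan with the longer path
        merged.append(current)
    return merged
```

Each merge saves one pen lift, at the cost of drawing a short connecting stroke across the gap, so `max_gap` should stay small relative to the pen width.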

The final results are OK, but the number of paths is higher than I would like (263 paths in the example above). Filtering out more of the paths is possible, but key details start to get lost depending on the specific nature of the image being used. A genetic algorithm could be used to fine-tune the filter settings for each image, or you could import the SVG into Inkscape and just manually remove the lines you don’t like.

For now, I decided to toss in some hatch shading and call it a successful experiment. Below are some plotted results with and without shading.
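One simple way to produce hatch shading is to draw evenly spaced horizontal strokes across the dark runs of the image. The sketch below takes that approach; the horizontal direction, the `spacing`, and the `dark_below` cut-off are all assumptions and may differ from what was used for the plots here.

```python
def hatch_segments(gray_rows, spacing=4, dark_below=96):
    """Return horizontal hatch strokes as ((x0, y), (x1, y)) pairs.

    gray_rows is a list of rows of 0-255 brightness values. Every
    `spacing`-th row is scanned, and each run of pixels darker than
    `dark_below` becomes one stroke.
    """
    segments = []
    for y in range(0, len(gray_rows), spacing):
        row = gray_rows[y]
        start = None
        for x, value in enumerate(row):
            if value < dark_below and start is None:
                start = x  # entering a dark run
            elif value >= dark_below and start is not None:
                segments.append(((start, y), (x - 1, y)))
                start = None  # leaving the dark run
        if start is not None:  # the run reaches the right edge
            segments.append(((start, y), (len(row) - 1, y)))
    return segments
```

Running a second pass with a lower `dark_below` and an offset in `y` would give denser strokes in the darkest areas.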

simple shape grammar

I’ve been working on a shape grammar that generates a sequence that can then be run through a genetic algorithm. These images were generated using two shapes and a simple set of rules. Parameters of the rules are randomized when the composition is created and saved to a sequence that will later be integrated into a genetic algorithm.
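To illustrate the idea, here is a toy grammar with two shapes and two rewrite rules. Each rule draws random parameters and records them, so the recorded sequence could later serve as a genome. The rules, parameter ranges, and names are invented for this sketch and are not the ones behind the images here.

```python
import math
import random


def apply_rule(shape, rng):
    """Rewrite one shape into child shapes.

    Returns (children, gene), where gene records the rule name and the
    random parameters drawn for it.
    """
    kind, x, y, size = shape
    if kind == "square":
        # Hypothetical rule: shrink the square and bud a circle off it.
        scale = rng.uniform(0.5, 0.9)
        angle = rng.uniform(0, 2 * math.pi)
        children = [
            ("square", x, y, size * scale),
            (
                "circle",
                x + size * math.cos(angle),
                y + size * math.sin(angle),
                size * (1 - scale),
            ),
        ]
        return children, ("square_rule", scale, angle)
    # Hypothetical rule: a circle turns into a smaller square.
    scale = rng.uniform(0.4, 0.8)
    return [("square", x, y, size * scale)], ("circle_rule", scale)


def generate(depth=3, seed=None):
    """Expand a single square `depth` times.

    Returns (drawn, sequence): every shape produced, and the recorded
    rule parameters in the order they were applied.
    """
    rng = random.Random(seed)
    shapes = [("square", 0.0, 0.0, 100.0)]
    drawn, sequence = list(shapes), []
    for _ in range(depth):
        next_shapes = []
        for shape in shapes:
            children, gene = apply_rule(shape, rng)
            next_shapes.extend(children)
            sequence.append(gene)
        drawn.extend(next_shapes)
        shapes = next_shapes
    return drawn, sequence
```

Because the sequence holds every random choice, a genetic algorithm could mutate or recombine sequences and replay them instead of drawing fresh random numbers.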